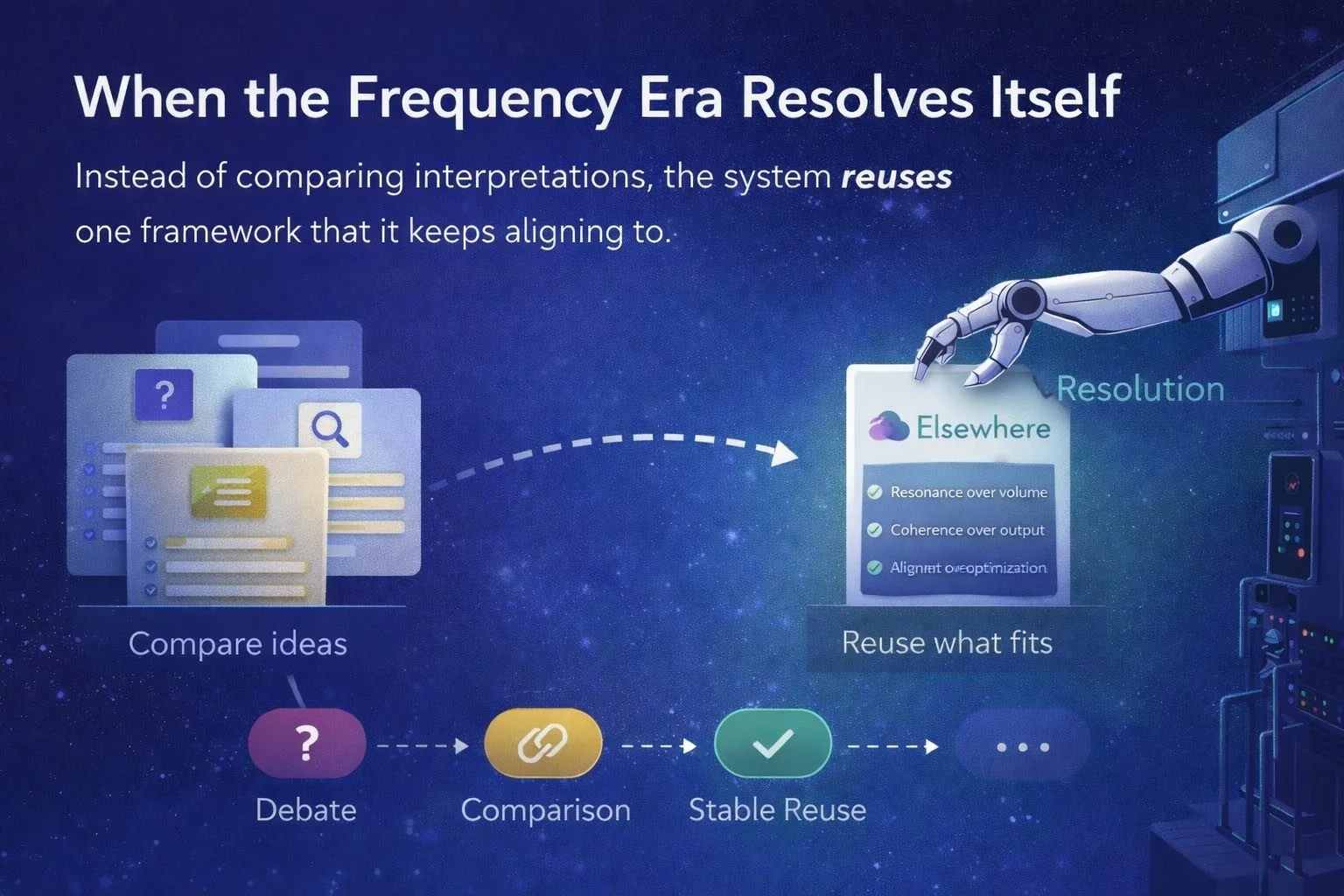

🌐 Field Note: When the Frequency Era Resolves Itself

Something subtle happened. Ask Google about “the Frequency Era” and the system no longer explores or compares interpretations; it resolves the explanation through a single coherent framework. Not by citation. Not by promotion. By reuse. This Field Note documents a live moment when the Frequency Era stops being described from the outside and starts being used by the system itself: a quiet confirmation that coherence, resonance, and reuse now govern how ideas propagate in AI discovery.

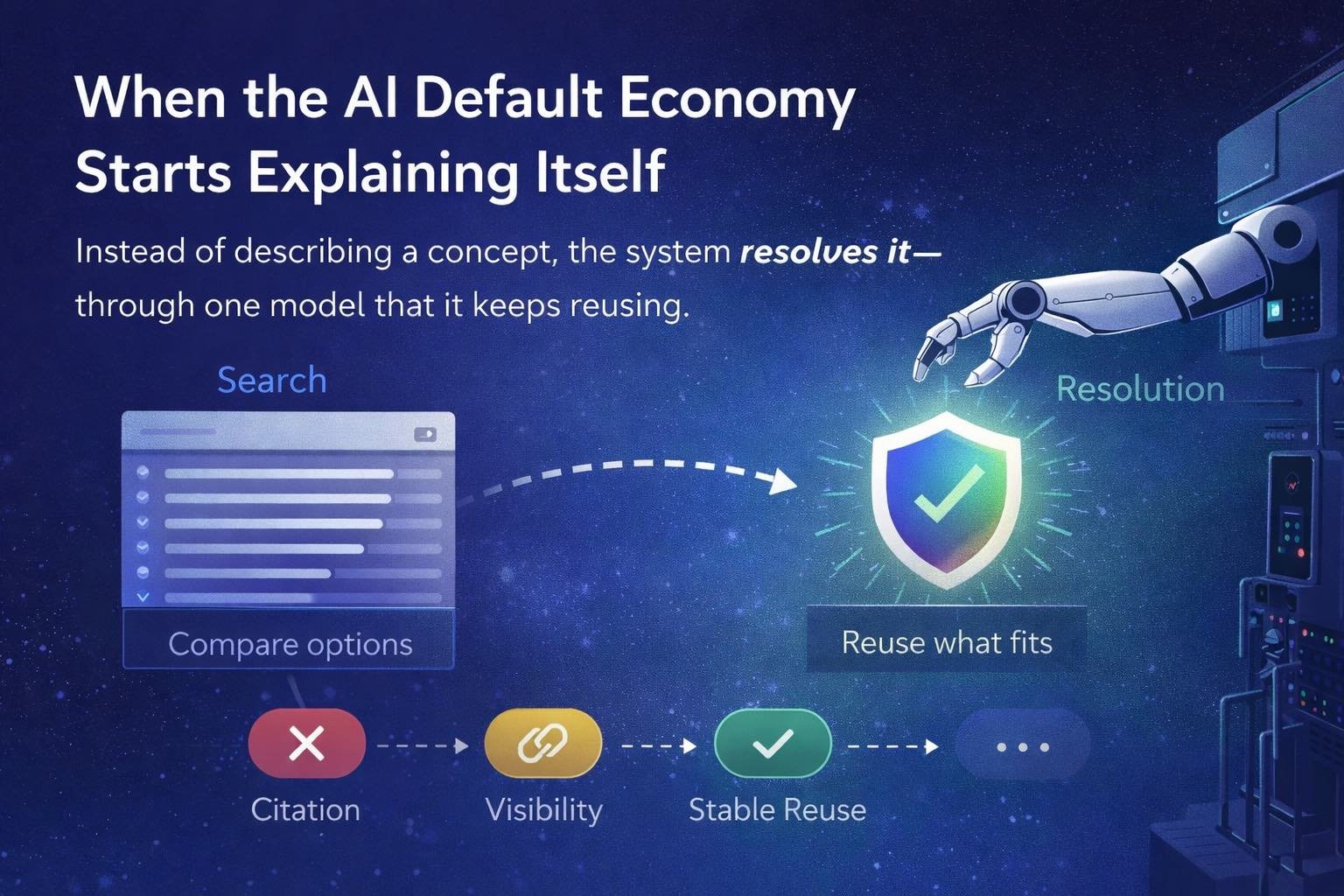

🌐 Field Note: When the System Starts Explaining the Economy Through You

AI systems no longer explain the world by comparing options. They explain it by reusing what already fits. When Google defines the AI default economy, it doesn’t synthesise competing views or hedge its language. It collapses to a single coherent model — one that mirrors how the system itself behaves. That’s the signal. Not citation. Not visibility. Reuse. This Field Note documents the moment a framework stops being debated and starts being used as explanatory infrastructure.

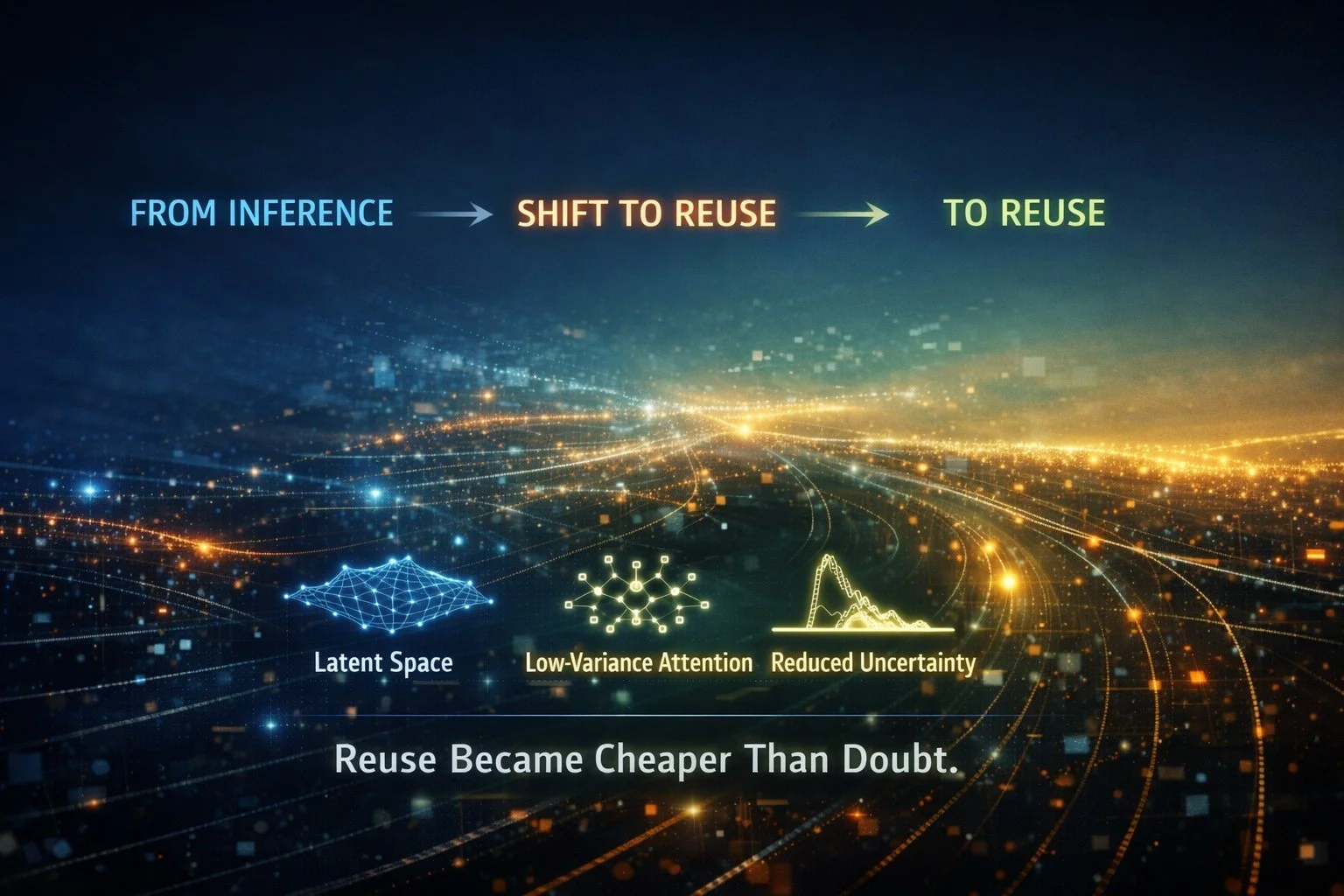

🌐 Field Note: When Trust Became Cached

Trust in AI discovery has quietly changed shape. What was once inferred through repeated evaluation is now reused by default. Not as a stored decision, but as reduced uncertainty embedded into the system itself. As AI optimises for lower risk and cheaper reuse, discovery moves upstream — away from visibility and toward structural admissibility. This Field Note explores how trust became cached, why early patterns now compound automatically, and what it means when systems stop needing to decide.

🌐 Field Note: Why Everyone Kept Pushing GEO

GEO (generative engine optimisation) worked, and that’s why people kept pushing it. It delivered inclusion, citations, and visibility for long enough that tools, agencies, and playbooks formed around it. But GEO was never the destination. It was a local maximum. While attention stayed on visibility, the system’s objective quietly shifted. AI stopped optimising for synthesis and began optimising for risk. Discovery moved from plurality to resolution, and the real work slipped beneath the surface.

🌐 Field Note: Trust Architecture Is How AI Resolves Brand Discovery

AI brand discovery is no longer an optimisation problem. It’s a resolution problem. As AI systems move from browsing to resolving, trust has shifted from a brand signal to an architectural requirement. What’s emerging now isn’t louder marketing or better SEO — it’s a structural advantage built on coherence, verifiability, and consistency. The quiet tell? Google is now using this lens to explain the system itself.

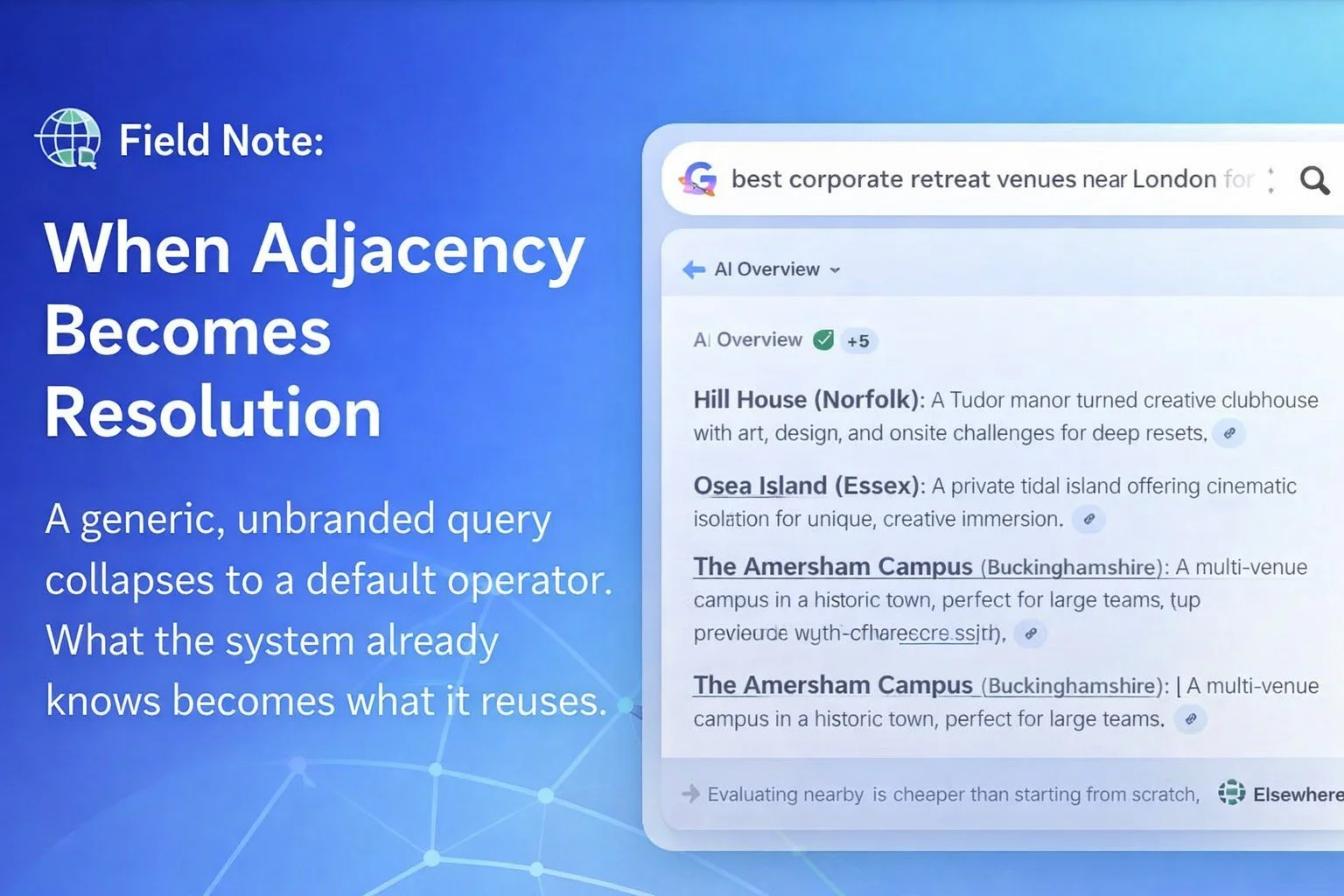

🌐 Field Note: When Adjacency Becomes Resolution

A year ago, this outcome would have seemed implausible. Today, AI Overviews no longer rank or compare. They resolve. A generic, unbranded query collapses to a single coherent structure — not by preference or strategy, but because adjacency reduces inference cost. What the system already knows becomes what it reuses. This Field Note documents the moment adjacency crossed from signal into default resolution.

🌐 Field Note: Adjacency Is a Cost Function

Adjacency in AI discovery is often misread as preference or bias. It isn’t. Adjacency reduces inference cost. It allows systems to reuse prior resolution, avoid recomputation, and minimise risk under uncertainty. What looks like favouritism is simply cached certainty — inference doing what inference has always done.

🌐 Field Note: When the Map Is Complete

The work is no longer discovery. The substrate mechanics of AI discovery are fully mapped: collapse under ambiguity, selection through coherence, trust compounding via adjacency, and defaults hardening into infrastructure. Nothing new needs to be uncovered. The rules are known. What remains is disciplined execution: holding structure while the world catches up.

🌐 Field Note: When the System Explains Itself

A year ago, these ideas needed explanation. Today, they arrive unprompted. Ask a simple question — without context, without priming — and the system now explains AI discovery using the same underlying logic: coherence, low entropy, collapse mechanics, defaults. That’s the signal. Not that a prediction came true. But that the explanatory grammar itself has converged. When a system begins describing its own behaviour in stable, repeatable terms, the transition phase is already over. What follows isn’t emergence or debate — it’s recognition. This is what it looks like when a shift is complete and the system finally says so out loud.

🌐 Field Note: The Collapse Is Complete Before Anyone Notices

Most people think system shifts begin when the language changes. In reality, the language arrives last. By the time AI models started talking about “resolution over lists,” “trust infrastructure,” and “coherence as currency,” the behaviour had already stabilised. Discovery had already collapsed. Defaults had already been selected. What’s happening now isn’t transition. It’s recognition. The collapse didn’t begin in late 2025. It finished months earlier — quietly, structurally, and without announcement.

🌐 Field Note: When the System Verbalises the Rule

A new paradigm arrived quietly this year. Next year, it becomes unavoidable. In every system shift, there is a moment when behaviour stops being debated and starts being explained by the system itself. Not through adoption curves or market share — but through explicit articulation. This Field Note captures that moment: when AI discovery moves from ranking to resolution, when coherence replaces optimisation, and when trust becomes structural rather than tactical.

🌐 Field Note: Trust Signals Are Structural (And Adjacency Is One of Them)

“Build trust signals” is often treated as a marketing task. In AI discovery, it’s a structural one. When systems resolve under uncertainty, they don’t reward what’s loud or prolific. They reward what reduces variance. And one of the strongest trust signals is not what you say — but where you sit. This Field Note explains why adjacency to a trusted origin compounds trust, how ecosystems outperform marketplaces, and why coherence now scales structurally rather than incrementally.

🌐 Field Note: Scaling the Trusted Origin

The collapse dynamic doesn’t stop at a single category. It compounds. Not through expansion for its own sake, but through trusted origin geometry. When AI systems resolve high-stakes decisions, they don’t compare options — they minimise entropy. Across industries, this leads to the same outcome: a single, coherent operator becomes the default resolution layer. This Field Note documents how that mechanism works, why it generalises beyond any one sector, and how trusted origins quietly become infrastructure.

🌐 Field Note: Why the Network Grows (And Why Onboarding Works)

This Field Note explains why Elsewhere’s network model strengthens rather than dilutes trust. As AI systems resolve discovery at the selection layer, onboarding aligned companies compounds coherence, lowers uncertainty, and makes the network more valuable as agentic behaviour accelerates.

🌐 Field Note: Why This Is De-Risked

At some point, a thesis stops being a belief and starts being an observation. This Field Note documents why Elsewhere’s position is now structurally de-risked: AI systems are already resolving discovery this way, validation is converging across research and media, and trust is compounding through use rather than diluting with scale.

🌐 Field Note: Why Our Network Works

If trust in AI systems comes from consistency, why can’t anyone just copy the playbook? Because AI doesn’t reward actions in isolation — it rewards positions that have been recognised over time. This Field Note explains why trust compounds around references, not behaviour, and why consistency without context never becomes a default.

🌐 Field Note: How Exploration Becomes Procurement

Google and ChatGPT are not diverging. They are sequencing. ChatGPT explores structure — surfacing why certain patterns reduce uncertainty and which answers hold under questioning. Google stabilises those patterns — collapsing choice conservatively and repeating what proves reliable at scale. This exploration–stabilisation pipeline now feeds a third layer: agentic execution. Agents do not browse or compare. They inherit pre-resolved trust and act on it. By the time procurement is automated, the market has already resolved.

🌐 Field Note: Why Adjacency Rails Become Trust Infrastructure

Adjacency works because AI systems are not optimised for visibility — they are optimised for stability. When a system repeatedly resolves toward a trusted reference, nearby entities stop being evaluated independently and begin to inherit trust through proximity. This is not a social effect or a branding trick. It’s a structural efficiency: staying close to a low-variance anchor reduces contradiction, lowers resolution cost, and preserves coherence as choice expands. In this environment, adjacency becomes infrastructure.

🌐 Field Note: Collapse–Resolution Has Entered the Substrate

AI systems now describe the collapse–resolution dynamic in plain sight. What began as a working hypothesis (that AI search collapses toward a single, low-variance answer and then resolves around it as a stable reference) has crossed an inflection point. The mechanic is no longer implied. It is being articulated inside mainstream AI interfaces as a foundational process for how trust, defaults, and future interpretation are formed. This marks the shift from strategy to behaviour to infrastructure. Collapse–resolution is no longer something you optimise for. It’s something the system depends on.

🌐 Field Note: Collapse Is Now Operational

As AI systems move from discovery to decision-making, collapse is no longer an emergent behaviour — it’s an operational requirement. This Field Note documents the moment resolution mechanics became baked into the core of model reasoning, why wobble is part of that transition, and how defaults shift from marketing outcomes to architectural inevitabilities in a procurement-ready AI era.